Short-Time Fourier Transform for deblurring Variational Autoencoders

Personal research project

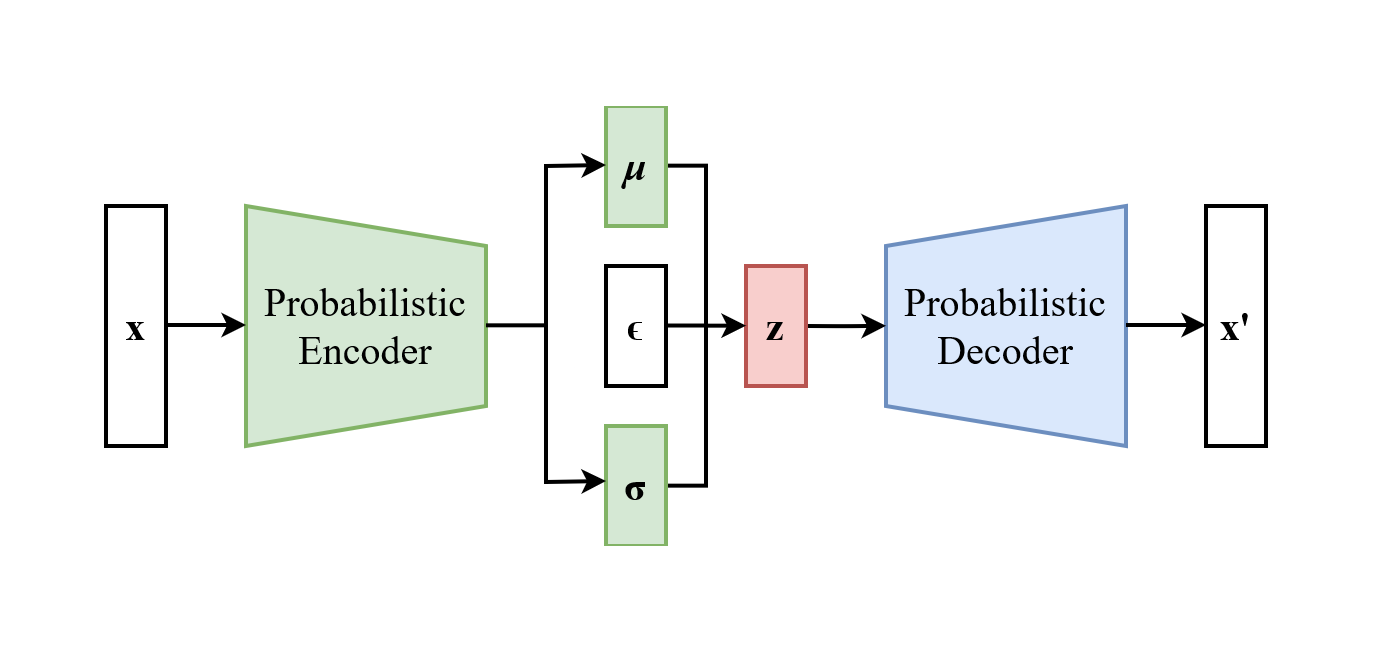

Variational Autoencoders (VAEs) are powerful generative models with strong probabilistic foundations. However, their generated samples are known to suffer from a characteristic blurriness compared with the outputs of other generative techniques. Extensive research has been devoted to this problem, and several works have focused on modifying the reconstruction term of the evidence lower bound (ELBO). In particular, many have experimented with augmenting the reconstruction loss with losses in the frequency domain. Such loss functions usually employ the Fourier transform to explicitly penalise the lack of higher-frequency components in the generated samples, since these components are responsible for sharp visual features. In this paper, I explore the aspects of these previous approaches that are not well understood, and propose an augmentation to the reconstruction term in response. The reasoning laid out in the paper suggests using the short-time Fourier transform and emphasising local phase coherence between the input and output samples. I illustrate the potential of the proposed loss on the MNIST dataset with both qualitative and quantitative results.
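To make the contrast between a global Fourier loss and a local, phase-aware one concrete, here is a minimal NumPy sketch. It is not the loss defined in the manuscript: the function names (`fourier_amplitude_loss`, `stft2d`, `local_phase_loss`), the 8×8 Hann window with a hop of 4, and the magnitude-weighted `1 - cos(Δφ)` phase penalty are all hypothetical choices, and images are assumed to be single-channel 2-D arrays such as 28×28 MNIST digits.

```python
import numpy as np


def fourier_amplitude_loss(x: np.ndarray, y: np.ndarray) -> float:
    """Global frequency-domain loss: mean squared error between 2-D FFT magnitudes.

    Missing high-frequency energy in y (e.g. from blur) increases this loss.
    """
    X = np.abs(np.fft.fft2(x))
    Y = np.abs(np.fft.fft2(y))
    return float(np.mean((X - Y) ** 2))


def stft2d(img: np.ndarray, win: int = 8, hop: int = 4) -> np.ndarray:
    """Hypothetical 2-D short-time Fourier transform.

    Slides a separable Hann window over the image with stride `hop` and takes
    the 2-D FFT of each windowed patch. Returns an array of shape
    (num_patches, win, win) of complex coefficients.
    """
    w1 = np.hanning(win)
    window = np.outer(w1, w1)  # separable 2-D Hann window
    h, w = img.shape
    spectra = []
    for i in range(0, h - win + 1, hop):
        for j in range(0, w - win + 1, hop):
            patch = img[i:i + win, j:j + win] * window
            spectra.append(np.fft.fft2(patch))
    return np.stack(spectra)


def local_phase_loss(x: np.ndarray, y: np.ndarray,
                     win: int = 8, hop: int = 4, eps: float = 1e-8) -> float:
    """Hypothetical local phase-coherence loss.

    Compares the phase of every STFT coefficient of x and y using
    1 - cos(phase difference), which is 0 for aligned phases and 2 for
    opposite ones. Each term is weighted by the input's local magnitude so
    that near-empty bins (where phase is meaningless) contribute little.
    """
    X = stft2d(x, win, hop)
    Y = stft2d(y, win, hop)
    phase_diff = np.angle(X) - np.angle(Y)
    weight = np.abs(X) / (np.abs(X).sum() + eps)  # weights sum to ~1
    return float(np.sum(weight * (1.0 - np.cos(phase_diff))))
```

For example, with `x = np.random.default_rng(0).random((28, 28))` and `y = np.roll(x, 1, axis=1)`, `fourier_amplitude_loss(x, y)` is zero up to floating-point error, because a circular shift leaves the global Fourier magnitudes unchanged, whereas `local_phase_loss(x, y)` is positive. In a VAE, a term like this would be added to the pixel-wise reconstruction loss with some weighting factor; that weighting is likewise an assumption of this sketch.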

Access the research manuscript on arXiv here.

Access the code repository on GitHub here.