Visual Saliency Map Translation

Personal research project

During my research internship at DREAM Lab (University of Illinois Urbana-Champaign), I collaborated with Dr. Haohan Wang on a project aimed at improving the interpretability of Convolutional Neural Networks (CNNs). Like other deep neural networks, CNNs lack interpretability and transparency: it is difficult to understand the rationale behind their predictions, which is why they are often described as a ‘black box’. This opacity poses risks when CNNs are deployed for critical tasks, because it is hard to anticipate when or why the model will make an inaccurate prediction. Improving the interpretability of machine learning models is the focus of Explainable AI (XAI), a field in which Dr. Haohan Wang is a leading researcher.

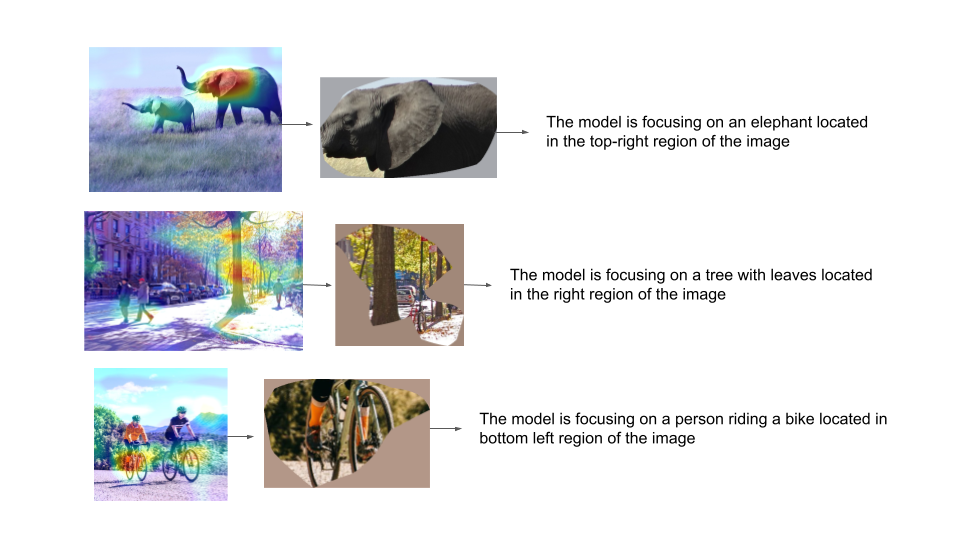

The Visual Saliency Translator we designed consists of two key components:

- A visual saliency map generator, which identifies the regions of the input image most relevant to the model’s prediction.

- An image-to-text model, which takes the output of the visual saliency map generator and produces a textual explanation of the saliency map.
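The two-component design can be sketched as a simple composition of two callables. This is a minimal illustration, not the project's code: the class name `SaliencyTranslator`, its fields, and the stub components in the usage example are hypothetical. In practice the first callable would wrap a Grad-CAM method around the CNN and the second would wrap an image captioning model such as BLIP.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical aliases: a real pipeline would pass tensors / PIL images and
# 2-D heatmap arrays; they are kept abstract in this sketch.
Image = Any
Heatmap = Any


@dataclass
class SaliencyTranslator:
    """Two-stage pipeline: saliency map generator -> image-to-text model."""

    # Stage 1 (assumed interface): image -> saliency heatmap for the CNN's prediction.
    saliency_generator: Callable[[Image], Heatmap]
    # Stage 2 (assumed interface): saliency heatmap -> textual explanation.
    image_to_text: Callable[[Heatmap], str]

    def explain(self, image: Image) -> str:
        # Run the stages in order: the heatmap from stage 1 is the input to stage 2.
        heatmap = self.saliency_generator(image)
        return self.image_to_text(heatmap)


if __name__ == "__main__":
    # Stub components standing in for a Grad-CAM wrapper and a BLIP wrapper.
    translator = SaliencyTranslator(
        saliency_generator=lambda img: [[0.0, 1.0], [0.0, 0.0]],
        image_to_text=lambda heatmap: "highlighted region in the upper right",
    )
    print(translator.explain("cat.jpg"))  # highlighted region in the upper right
```

Keeping the stages as injected callables makes it easy to swap one Grad-CAM variant for another without touching the captioning stage, which matches the experimentation described below.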

We experimented with the Grad-CAM family of methods as our visual saliency map generators. Given a CNN and an input image, these methods produce a heatmap that highlights the image regions that most influence the CNN’s prediction. For the image-to-text model, we used BLIP. Examples of the final output of the full pipeline are shown in the figure above.
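For reference, the original Grad-CAM formulation weights each feature map $A^k$ of a chosen convolutional layer by the spatially averaged gradient of the class score, $\alpha_k^c = \frac{1}{Z}\sum_i\sum_j \partial y^c / \partial A^k_{ij}$, and takes $L^c = \mathrm{ReLU}\left(\sum_k \alpha_k^c A^k\right)$ as the heatmap. The NumPy sketch below implements only that combination step and assumes the activations and gradients have already been extracted from the CNN (for example with framework hooks); the function names are hypothetical, and other family members such as Grad-CAM++ compute the weights differently. Nearest-neighbour upsampling is a simplification; implementations commonly use bilinear interpolation instead.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine feature maps and their gradients into a Grad-CAM heatmap.

    activations: (K, H, W) feature maps A^k of the chosen conv layer.
    gradients:   (K, H, W) gradients dy^c/dA^k of the target class score.
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    if activations.shape != gradients.shape or activations.ndim != 3:
        raise ValueError("expected two arrays of identical shape (K, H, W)")
    # alpha_k: global-average-pool the gradients over the spatial dimensions.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum over channels, then ReLU keeps only positive evidence.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # (H, W)
    # Scale to [0, 1] for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def upsample_nearest(cam: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling so the heatmap matches the input size."""
    return np.kron(cam, np.ones((factor, factor)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.random((4, 7, 7))            # stand-in activations
    dY = rng.standard_normal((4, 7, 7))  # stand-in gradients
    heatmap = upsample_nearest(grad_cam(A, dY), factor=32)
    print(heatmap.shape)  # (224, 224)
```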

Access the code repository on GitHub here.

Access the Google Slides deck containing the project materials here.