publications

2025


Formalising Propositional Information via Implication Hypergraphs
V. Dalal, 2025

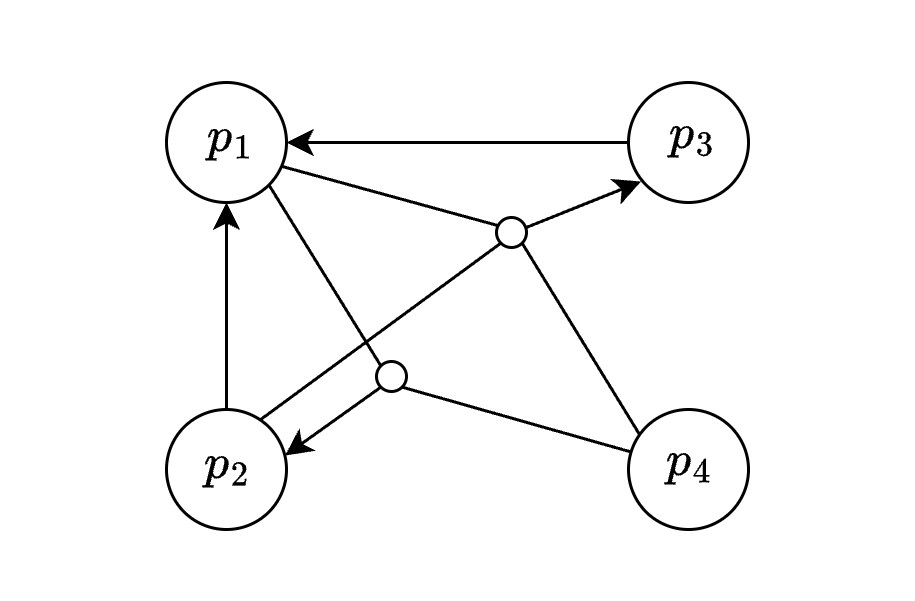

This work introduces a framework for quantifying the information content of logical propositions through the use of implication hypergraphs. We posit that a proposition’s informativeness is primarily determined by its relationships with other propositions, specifically the extent to which it implies or derives other propositions. To formalize this notion, we develop a framework based on implication hypergraphs that seeks to capture these relationships. Within this framework, we define propositional information, derive some of its key properties, and illustrate the concept through examples. While the approach is broadly applicable, mathematical propositions emerge as an ideal domain for its application due to their inherently rich and interconnected structure. We provide several examples to illustrate this and then discuss the limitations of the framework, along with suggestions for potential refinements.
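To make the idea of a proposition "implying or deriving" others concrete, here is a minimal Python sketch. It assumes a directed hypergraph in which each hyperedge reads "these premises jointly imply this conclusion", finds everything a proposition lets you derive by forward chaining, and scores the proposition by how many new propositions it yields. The names `derivable`, `toy_information` and `EDGES`, and the scoring rule itself, are hypothetical illustrations, not the definition of propositional information given in the paper.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

# A hyperedge (premises, conclusion) reads: "all premises together imply conclusion".
Hyperedge = Tuple[FrozenSet[str], str]


def derivable(start: Iterable[str], edges: Iterable[Hyperedge]) -> Set[str]:
    """Forward-chain from `start`, returning every proposition that can be derived."""
    known = set(start)
    edge_list: List[Hyperedge] = list(edges)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in edge_list:
            # A hyperedge fires only once every one of its premises is already known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


def toy_information(p: str, edges: Iterable[Hyperedge],
                    axioms: FrozenSet[str] = frozenset()) -> int:
    """Hypothetical score: propositions derivable with `p` but not from `axioms` alone (excluding `p`)."""
    edge_list = list(edges)
    gained = derivable(axioms | {p}, edge_list) - derivable(axioms, edge_list) - {p}
    return len(gained)


# Toy hypergraph: a -> b, a -> c, and {b, c} -> d (d needs both b and c).
EDGES: List[Hyperedge] = [
    (frozenset({"a"}), "b"),
    (frozenset({"a"}), "c"),
    (frozenset({"b", "c"}), "d"),
]

print(toy_information("a", EDGES))                    # 3: a yields b, c and d
print(toy_information("b", EDGES))                    # 0: b alone cannot fire {b, c} -> d
print(toy_information("b", EDGES, frozenset({"c"})))  # 1: with c assumed, b yields d
```

Whether to weight derivations, normalise by graph size, or treat assumed propositions differently are design choices this toy score deliberately leaves open.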

@misc{dalal2025formalisingpropositionalinformationimplication, title = {Formalising Propositional Information via Implication Hypergraphs}, author = {Dalal, V.}, year = {2025}, eprint = {2502.00186}, archiveprefix = {arXiv}, primaryclass = {math.LO}, url = {https://arxiv.org/abs/2502.00186}, }

Deep video anomaly detection in automated laboratory setting
A. Dabouei*, JP. Shibu*, V. Dalal*, C. Cao, A. MacWilliams, J. Kangas, and M. Xu
Expert Systems with Applications, 2025

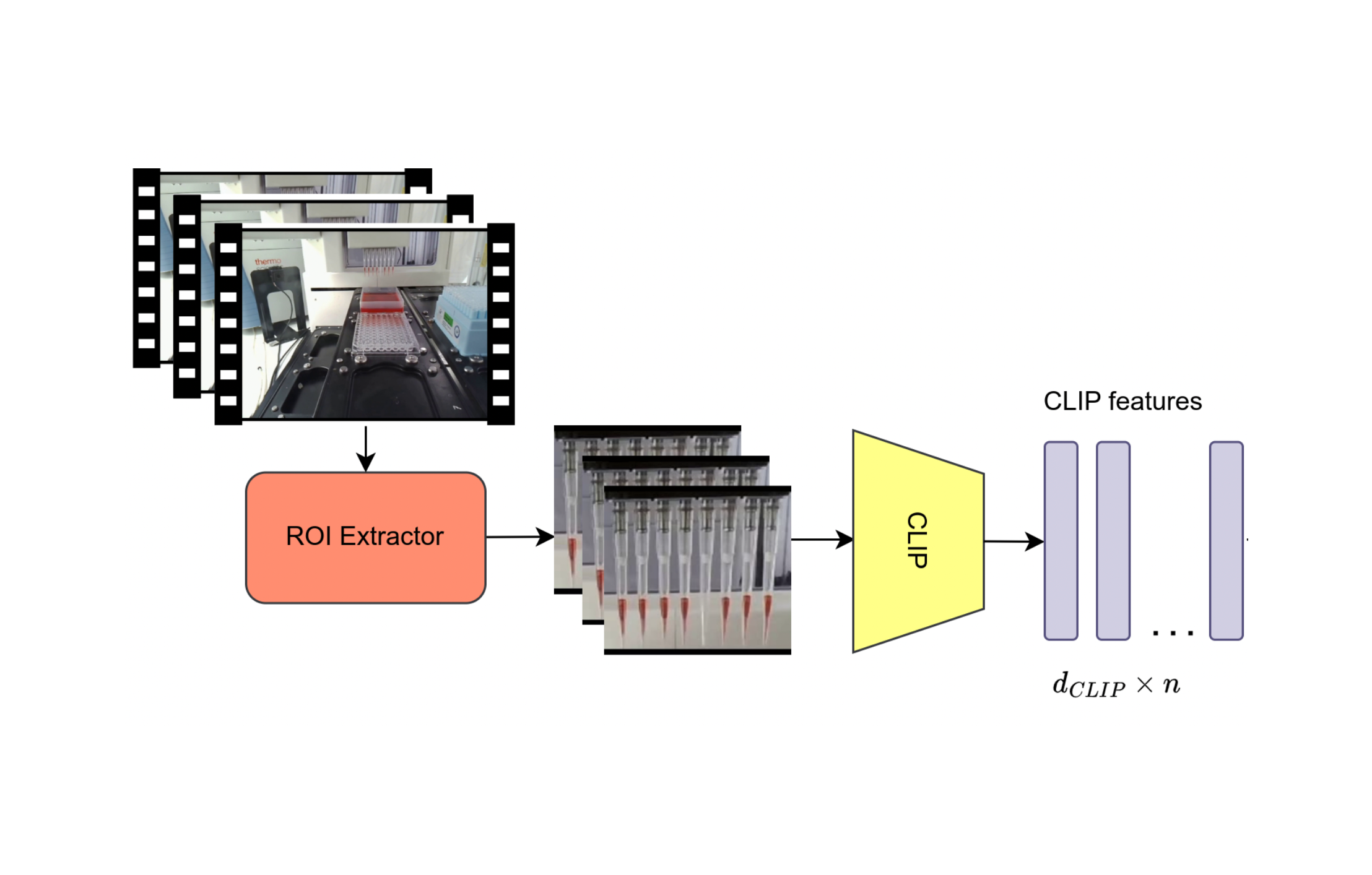

Laboratory automation integrates robotics, machine learning, and computer vision to enhance precision and efficiency while reducing costs. Automatic monitoring of procedures, despite its pivotal importance in fully automated experimentation, remains an overlooked task. In this paper, we address this shortcoming by developing a learning method for detecting anomalies in the laboratory setting. To formalize the problem, we focus on liquid transfer, a central task in laboratory automation given the frequent need for reagent mixing and solution preparation. We introduce a novel video anomaly detection framework that combines robust CLIP features with a transformer encoder. Through a series of experiments and ablation studies, we demonstrate that our method surpasses current state-of-the-art anomaly detection techniques adapted to laboratory automation by a margin of over 11%, achieving an AUC of 98.79% for video-level anomaly detection.
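The AUC quoted above is the area under the ROC curve computed over video-level scores. As a minimal sketch of how such a figure is obtained, the Python below computes ROC AUC as the probability that a randomly chosen anomalous video is scored higher than a randomly chosen normal one (ties count half), which equals the area under the ROC curve. Purely for illustration it assumes that a video's score is the maximum of its per-frame anomaly scores; that pooling rule, the helper names and the toy data are hypothetical and not taken from the paper.

```python
from typing import Sequence


def video_score(frame_scores: Sequence[float]) -> float:
    # Assumption: a video is as anomalous as its most anomalous frame (max pooling).
    return max(frame_scores)


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC via the Mann-Whitney pairwise comparison; label 1 = anomalous, 0 = normal."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one anomalous and one normal video")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0  # anomalous video correctly ranked above a normal one
            elif p == n:
                wins += 0.5  # a tie counts as half a correct ranking
    return wins / (len(pos) * len(neg))


# Hypothetical per-frame scores for four videos and their ground-truth labels.
frames = [[0.1, 0.3], [0.2, 0.7], [0.6, 0.2], [0.4, 0.5]]
labels = [0, 1, 0, 1]
scores = [video_score(f) for f in frames]  # [0.3, 0.7, 0.6, 0.5]
print(roc_auc(scores, labels))             # 0.75
```

The pairwise loop is O(PN) in the number of anomalous and normal videos; for large evaluation sets a rank-based computation, or an existing routine such as scikit-learn's `roc_auc_score`, gives the same value more efficiently.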

@article{DABOUEI2025126581, title = {Deep video anomaly detection in automated laboratory setting}, journal = {Expert Systems with Applications}, pages = {126581}, year = {2025}, issn = {0957-4174}, doi = {10.1016/j.eswa.2025.126581}, url = {https://www.sciencedirect.com/science/article/pii/S0957417425002039}, author = {Dabouei*, A. and Shibu*, JP. and Dalal*, V. and Cao, C. and MacWilliams, A. and Kangas, J. and Xu, M.}, keywords = {Laboratory automation, Anomaly detection, Liquid transfer, Video analysis, Transformer, Deep learning}, }

2024


Dataset Distillation via the Wasserstein Metric
H. Liu, Y. Li, T. Xing, V. Dalal, L. Li, J. He, and H. Wang
2024

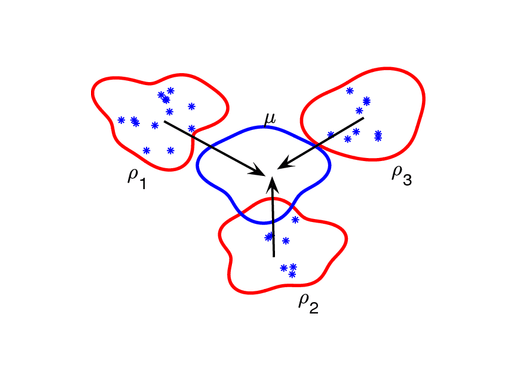

Dataset Distillation (DD) has emerged as a powerful strategy for encapsulating the information in large datasets within significantly smaller synthetic equivalents, preserving model performance at reduced computational cost. Pursuing this objective, we use the Wasserstein distance, a metric grounded in optimal transport theory, to enhance distribution matching in DD. Our approach employs the Wasserstein barycenter, which provides a geometrically meaningful way to quantify differences between distributions and to efficiently capture the centroid of a set of distributions. By embedding synthetic data in the feature spaces of pretrained classification models, we facilitate effective distribution matching that leverages the prior knowledge inherent in these models. Our method not only maintains the computational advantages of distribution-matching-based techniques but also achieves new state-of-the-art performance across a range of high-resolution datasets. Extensive experiments demonstrate the effectiveness and adaptability of our method, underscoring the untapped potential of Wasserstein metrics in dataset distillation.
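In one dimension the Wasserstein geometry described above has a closed form, which makes it a convenient way to build intuition. The Python sketch below assumes equal-size, uniformly weighted 1-D samples: the W2 distance then pairs sorted samples in order, and the W2 barycenter is obtained by averaging sorted samples (that is, averaging quantile functions). It only illustrates the metric and the barycenter; the method in the paper operates on multi-dimensional features of pretrained networks, and the function names here are hypothetical.

```python
from typing import List, Sequence


def w2_distance_1d(x: Sequence[float], y: Sequence[float]) -> float:
    """W2 distance between two equal-size 1-D empirical distributions (uniform weights).
    In 1-D the optimal transport plan matches the i-th smallest point of x to that of y."""
    if len(x) != len(y):
        raise ValueError("this sketch assumes equal sample counts")
    xs, ys = sorted(x), sorted(y)
    return (sum((a - b) ** 2 for a, b in zip(xs, ys)) / len(xs)) ** 0.5


def w2_barycenter_1d(dists: Sequence[Sequence[float]],
                     weights: Sequence[float]) -> List[float]:
    """Weighted W2 barycenter of equal-size 1-D samples: a weighted average of sorted samples."""
    n = len(dists[0])
    if any(len(d) != n for d in dists):
        raise ValueError("this sketch assumes equal sample counts")
    total = sum(weights)
    sorted_dists = [sorted(d) for d in dists]
    return [sum(w * d[i] for w, d in zip(weights, sorted_dists)) / total for i in range(n)]


a = [0.0, 1.0, 2.0]
b = [12.0, 10.0, 11.0]  # input order does not matter; samples are sorted internally
bary = w2_barycenter_1d([a, b], [1.0, 1.0])
print(bary)                     # [5.0, 6.0, 7.0]
print(w2_distance_1d(a, b))     # 10.0
print(w2_distance_1d(a, bary))  # 5.0, halfway along the W2 geodesic from a to b
```

Unlike an equal mixture of `a` and `b`, which would be bimodal, the barycenter is a translated copy of the inputs sitting between them; this shape-preserving averaging is one reason barycenters are attractive for summarising a set of distributions.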

@misc{liu2024datasetdistillationwassersteinmetric, title = {Dataset Distillation via the Wasserstein Metric}, note = {ICCV 2025}, author = {Liu, H. and Li, Y. and Xing, T. and Dalal, V. and Li, L. and He, J. and Wang, H.}, year = {2024}, eprint = {2311.18531}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, url = {https://arxiv.org/abs/2311.18531}, }

Short-Time Fourier Transform for deblurring Variational Autoencoders
V. Dalal, 2024

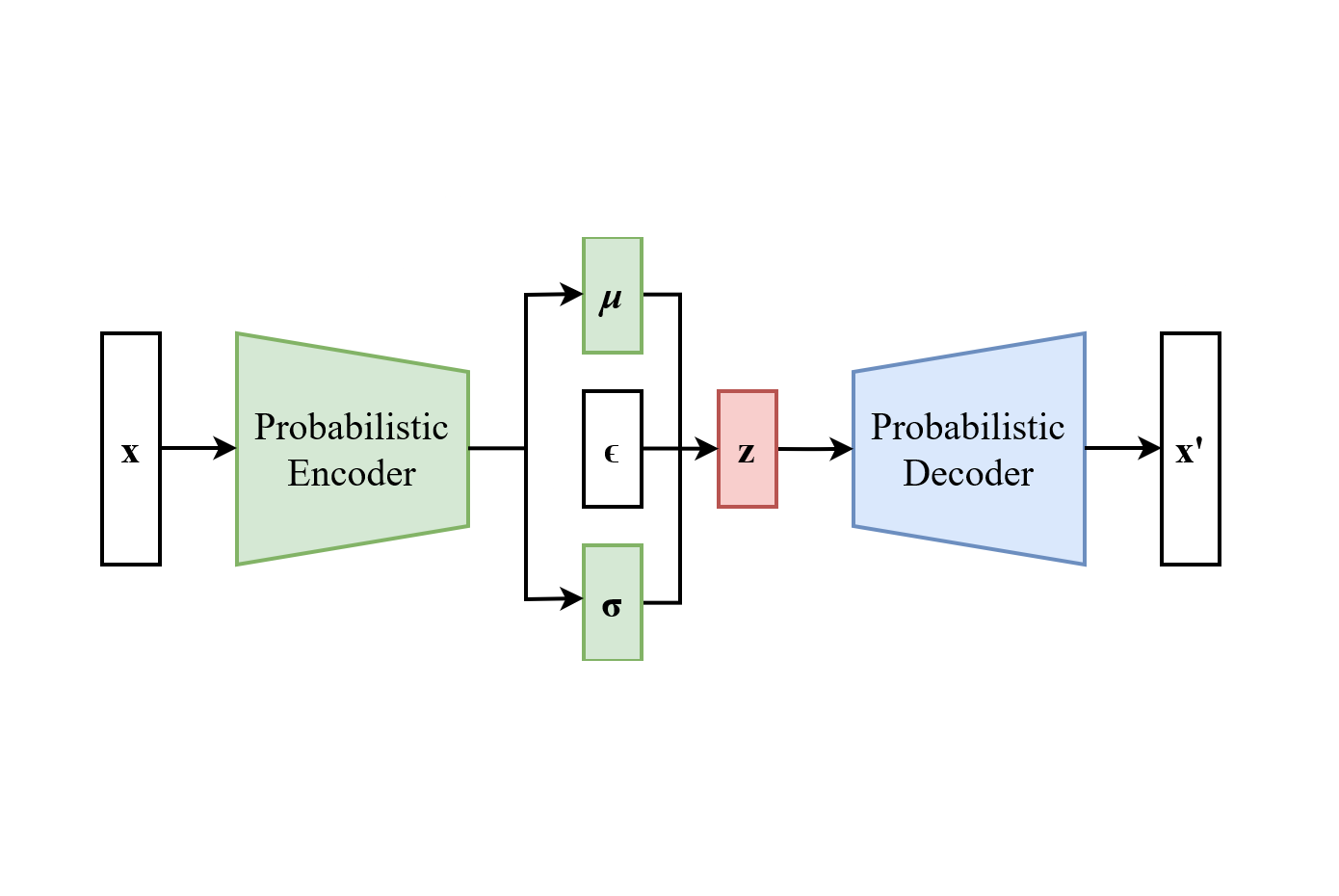

Variational Autoencoders (VAEs) are powerful generative models; however, their generated samples are known to suffer from a characteristic blurriness compared to the outputs of alternative generative techniques. Extensive research efforts have been made to tackle this problem, and several works have focused on modifying the reconstruction term of the evidence lower bound (ELBO). In particular, many have experimented with augmenting the reconstruction loss with losses in the frequency domain. Such loss functions usually employ the Fourier transform to explicitly penalise the absence, in the generated samples, of the higher-frequency components responsible for sharp visual features. In this paper, we explore aspects of these previous approaches that are not well understood, and we propose an augmentation to the reconstruction term in response. Our reasoning leads us to use the short-time Fourier transform and to emphasise local phase coherence between the input and output samples. We illustrate the potential of our proposed loss on the MNIST dataset with both qualitative and quantitative results.
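To illustrate why a short-time Fourier transform can expose phase information that a magnitude-only comparison misses, here is a minimal pure-Python sketch on a 1-D signal. It uses Hann-windowed frames and a direct DFT, and compares two hypothetical losses: a mean squared difference of STFT magnitudes, and a mean squared difference of the complex STFT coefficients, which also penalises local phase mismatch. The frame length, hop size, function names and both losses are assumptions for illustration; the paper works with 2-D MNIST images and defines its own loss.

```python
import cmath
import math
from typing import List, Sequence


def stft(signal: Sequence[float], frame_len: int = 8, hop: int = 4) -> List[List[complex]]:
    """Naive 1-D STFT: slide a Hann window over the signal and take a direct DFT of each frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + n] * window[n] for n in range(frame_len)]
        frames.append([
            sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len) for n in range(frame_len))
            for k in range(frame_len)
        ])
    return frames


def magnitude_loss(x: Sequence[float], y: Sequence[float], frame_len: int = 8, hop: int = 4) -> float:
    """Hypothetical loss: compares only |STFT|, so it is blind to phase."""
    X, Y = stft(x, frame_len, hop), stft(y, frame_len, hop)
    d = [(abs(a) - abs(b)) ** 2 for fx, fy in zip(X, Y) for a, b in zip(fx, fy)]
    return sum(d) / len(d)


def complex_loss(x: Sequence[float], y: Sequence[float], frame_len: int = 8, hop: int = 4) -> float:
    """Hypothetical loss: compares complex STFT coefficients, so local phase errors also count."""
    X, Y = stft(x, frame_len, hop), stft(y, frame_len, hop)
    d = [abs(a - b) ** 2 for fx, fy in zip(X, Y) for a, b in zip(fx, fy)]
    return sum(d) / len(d)


x = [math.sin(2 * math.pi * n / 8) for n in range(32)]
y = [-v for v in x]                    # identical magnitudes, phase shifted by pi
print(magnitude_loss(x, y) < 1e-12)    # True: the phase flip is invisible to magnitudes
print(complex_loss(x, y) > 0)          # True: the complex comparison detects it
```

A practical version would use an FFT-based routine such as `scipy.signal.stft` or `torch.stft` instead of this O(N^2) per-frame DFT.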

@misc{dalal2024shorttimefouriertransformdeblurring, title = {Short-Time Fourier Transform for deblurring Variational Autoencoders}, author = {Dalal, V.}, year = {2024}, eprint = {2401.03166}, archiveprefix = {arXiv}, primaryclass = {eess.IV}, url = {https://arxiv.org/abs/2401.03166}, }